It is school enrollment and STAR (Standardized Testing and Reporting) season in California, so I want to dispel some myths that "everybody knows".

I spent Earth Day, last Friday, sewing with three friends. Between the four of us, we have earned 3 PhDs and are raising 4 kids. Unlike most (famous) education reformers, we went to public schools and are sending (or plan to send) our kids to public schools.

Of course, we conversed about standardized testing and NCLB and the limits of what metrics can tell us.

Pennamite happens to have a PhD in Education, and she was surprised that anyone pays attention to standardized test scores. I also wrangle data for a living, and we discussed some glaring flaws in the data collection. People who have actually looked at the data know that the scores are a better proxy for parental income and educational attainment than for teacher and school quality.

First, a bit of background.

The California Academic Performance Index (API) is a single-number distillation of school performance based on standardized test scores. The data is further distilled by binning schools into deciles by API (10 highest, 1 lowest). After criticism that this was too simplistic, a second 1-10 number, the similar schools rank, was introduced to rate each school against schools with similar demographics.
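To show how much information the decile binning throws away, here is a minimal Python sketch of a rank-and-split into deciles. It is an illustration under simple assumptions, not the California Department of Education's actual procedure: the school names and API values are made up, and the real ranking may group schools and break ties differently. The function name `api_deciles` is hypothetical.

```python
def api_deciles(api_by_school: dict[str, int]) -> dict[str, int]:
    """Assign deciles by API rank: 10 = highest tenth, 1 = lowest tenth.

    Ties keep dictionary insertion order (sorted() is stable), which a
    real ranking procedure would need to handle more carefully.
    """
    ranked = sorted(api_by_school, key=api_by_school.get)  # lowest API first
    n = len(ranked)
    return {school: i * 10 // n + 1 for i, school in enumerate(ranked)}


# Made-up API scores for ten hypothetical schools (not real data).
schools = {
    f"School {letter}": api
    for letter, api in zip("ABCDEFGHIJ",
                           [612, 655, 701, 734, 760, 788, 812, 845, 880, 921])
}

deciles = api_deciles(schools)
print(deciles["School A"], deciles["School J"])  # 1 10
```

Schools 30 API points apart can land in different deciles, while schools with very different student bodies can share one.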

How would you like your work performance reduced to a scale of 1-10 and published on the web with no place to explain the extenuating circumstances?

Magnet Yenta Sandra Tsing Loh (another science-trained mom) observed firsthand how real estate agents attempt to whip home-buying parents into a frenzy with school API and STAR test scores.

She wrote a book about it, and privately told me that most of it was absolutely true. Only a small portion was exaggerated for comic effect.

For instance, as local realtors will happily point out, everyone knows that the best school district in the beach cities is Manhattan Beach Unified. Redondo Beach schools are a mixed bag, but stay away from gang-infested Adams Middle School in "felony flats" (our neighborhood school). The conventional wisdom had been that if you must live within the Adams MS boundaries because you lack a spare half million or so, you should get your kids permits to attend Parras MS (in south Redondo Beach) or Manhattan Beach MS instead.

It's not just realtors. I was surprised when a new hire at work spoke so authoritatively about the schools in our region, despite living 30 miles away. I asked her how she knew so much. She had been trolling the school test score websites. She felt like she knew the schools without ever setting foot on a single campus.

Standardized test scores can give false impressions of schools and a false sense of security that one understands something that is very complex.

To prove that the conventional wisdom about STAR test scores is wrong, I will use--STAR test scores! Yes, I know that is ironic.

Anyway, let's compare the APIs of Manhattan Beach MS (941) and Adams MS (858). Notice the school demographics: MBMS students are 68% non-Hispanic white, while AMS students are 30% non-Hispanic white.

(I am surprised that people will say in polite company that AMS has a gang problem, when there is absolutely no gang activity there. I think they are making assumptions based upon the melanin levels of the kids, but I am too polite to ask that people clarify what they mean by those remarks.)

Let's forget race for now and look at economic and class differences between the schools.

3% of the kids at MBMS are economically disadvantaged compared to 39% of the kids at AMS. 55% of the kids at MBMS have at least one parent with a graduate degree compared to 14% at AMS.

As I wrote here and here, there is a huge difference between going home to a 4,000-square-foot house with a full-time housekeeper AND a stay-at-home mom near parks and the beach, and going home to a one-bedroom apartment shared with five other people in a neighborhood where kids get shot in the crossfire in their front yards. It's hard to study effectively in the latter. (Though gang warfare is not a problem inside the AMS boundaries, some of the inter-district transfer students are trying to escape from those nearby neighborhoods.)

Wow, that was a long preamble to my rebuttal to real estate agents and trollers of greatschools.net.

It's late, so I will just tackle the parental education angle. Take a look at the statistics for

The students at MBMS have higher test scores than those at AMS. The difference narrows when comparing just the kids whose parents have graduate degrees, but MBMS is still slightly higher. But the gap in language scores could easily be due to the much higher number of English learners at AMS.

Let's look closely at the math scores for just the kids whose parents have graduate degrees (henceforth labeled "grad").

All 6th graders at both schools take the same CST math test, and the MBMS "grad" kids edge out their AMS counterparts with a mean score of 429.9 vs. 417.9. After that, the results are confounded by the different math classes that kids can take. Actually, let's just look at the data in tabular form.

Math 6, 7 and 8 denote the regular math classes. When Bad Dad and I were students in California in the 1970s, the "smart" students took pre-Algebra in 7th grade and Algebra in 8th grade. The regular kids took pre-Algebra in 8th grade, and 8th grade math was considered remedial. At AMS, the regular kids take pre-Algebra in 7th grade, a year earlier than at MBMS.

(But Governor Schwarzenegger has moved the goalposts: he wants schools to push all CA students into Algebra by 8th grade, a year (or more) earlier than a generation ago. He also makes no exception for kids with learning disabilities, hence the rush to create Algebra preparedness classes that qualify as Algebra for this purpose. But that's another story in itself.)

There is no standardized test for pre-Algebra, so students who take pre-Algebra in 6th grade take the same 6th grade math test as the rest of the kids. Notice that, for all groups and schools, the kids who are accelerated in math score much higher than the students who take the same course a year later. That suggests either that the accelerated kids really are different from the rest, or that teaching advanced math concepts at an early age helps them learn more. Unless we do a controlled experiment, we won't know.
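The size of that gap can be read straight off the Algebra 1 rows of the 2010 STAR tables in this post. This short Python sketch just subtracts the 8th-grade Algebra 1 mean from the 7th-grade one for each school and group; the dictionary layout and the function name `acceleration_gaps` are my own choices for illustration.

```python
# Algebra 1 mean scores copied from the 2010 STAR tables in this post:
# (mean for 7th graders taking Algebra 1, mean for 8th graders taking it).
ALGEBRA1_MEANS = {
    ("AMS", "grad"): (454.8, 371.8),
    ("AMS", "all"): (425.7, 372.2),
    ("MBMS", "grad"): (496.7, 471.7),
    ("MBMS", "all"): (496.9, 470.5),
}


def acceleration_gaps(means):
    """7th-grade minus 8th-grade Algebra 1 mean, rounded to one decimal."""
    return {key: round(g7 - g8, 1) for key, (g7, g8) in means.items()}


for (school, group), gap in acceleration_gaps(ALGEBRA1_MEANS).items():
    print(f"{school:<4} {group:<4} accelerated kids ahead by {gap} points")
```

The gap is positive in every row, but the subtraction alone cannot tell selection apart from a teaching effect.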

We want kids that are good at math to be accelerated to a level that challenges them. The point of schooling is to teach the kids as much as they are capable of learning--not to maximize mean standardized test scores.

Adams Middle School 2010 STAR results

| Test | # Grad | % Grad | Mean Grad | # All | % All | Mean All |
|---|---|---|---|---|---|---|
| Math 6 | 42 | 100 | 417.9 | 262 | 100 | 375.6 |
| Math 7 | 23 | 68 | 360.1 | 227 | 80.5 | 359.9 |
| Math 8 | 5 | 12 | * | 63 | 23 | 312.2 |
| Alg-1 7 | 11 | 32 | 454.8 | 55 | 19.5 | 425.7 |
| Alg-1 8 | 20 | 48 | 371.8 | 157 | 58 | 372.2 |
| Geo 8 | 17 | 40 | 427.2 | 51 | 19 | 433.4 |
| Alg-2 | 0 | 0 | * | 1 | 0 | * |

An asterisk (*) means the score was withheld for groups of fewer than 10 students.

Manhattan Beach Middle School 2010 STAR results

| Test | # Grad | % Grad | Mean Grad | # All | % All | Mean All |
|---|---|---|---|---|---|---|
| Math 6 | 247 | 100 | 429.9 | 431 | 100 | 415 |
| Math 7 | 220 | 88 | 417.8 | 414 | 90 | 407.3 |
| Math 8 | 96 | 47 | 394.2 | 241 | 55 | 385.8 |
| Alg-1 7 | 31 | 12 | 496.7 | 44 | 9.6 | 496.9 |
| Alg-1 8 | 87 | 42 | 471.7 | 136 | 35 | 470.5 |
| Geo 8 | 23 | 11 | 458.4 | 36 | 9.3 | 456.1 |
| Alg-2 | 0 | 0 | * | 0 | 0 | * |
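Each % column appears to be that test's share of the grade's test-takers within the group (the 6th grade rows are 100% because everyone takes Math 6). Assuming that reading, a short Python check reproduces the "% Grad" column of both tables from the counts. The nested-dictionary layout and the function name `grade_shares` are illustrative choices, not anything from the STAR reports.

```python
# "Grad" test-taker counts copied from the two 2010 STAR tables above.
GRAD_COUNTS = {
    "AMS": {
        7: {"Math 7": 23, "Alg-1": 11},
        8: {"Math 8": 5, "Alg-1": 20, "Geo": 17, "Alg-2": 0},
    },
    "MBMS": {
        7: {"Math 7": 220, "Alg-1": 31},
        8: {"Math 8": 96, "Alg-1": 87, "Geo": 23, "Alg-2": 0},
    },
}


def grade_shares(counts_by_test):
    """Turn one grade's test-taker counts into whole-number percentages."""
    total = sum(counts_by_test.values())
    return {test: round(100 * n / total) for test, n in counts_by_test.items()}


for school, grades in GRAD_COUNTS.items():
    for grade, counts in grades.items():
        print(school, grade, grade_shares(counts))
```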

Notice that 77% of all 8th graders at AMS, the school with the lower API ranking and the one labeled by realtors as "worse", met the new standard by taking Algebra 1 or higher. Of all AMS 8th graders, 19% took Geometry, exceeding the new goal and besting their parents' generation by 1-2 years. In contrast, only 45% and 9.3%, respectively, of all 8th graders at the "better" school did the same.

Why does only about half the share of kids at the "better" school take the same difficult classes as the kids at the "worse" school, despite the advantage of coming from wealthier homes with better-educated parents?

It's even more dramatic for the "grad" kids. At AMS, 88% of those kids had taken Algebra 1 by 8th grade, and 40% had gone on to Geometry. (One AMS 8th grader, not in the "grad" group, had even taken Algebra 2, 3 years ahead of my generation.) In contrast, at MBMS, only 53% of the "grad" kids had taken Algebra 1 by 8th grade, and 11% made it to Geometry.

Remember that 8th grade math, under the new state standards, is remedial math (though it was par a generation ago). So 55% of the kids at the "better" school took remedial math, including 47% of the "grad" kids. At the "worse" school, the figures are 23% and 12%, respectively.

Most damning, the "grad" kids at MBMS are 4.2 times more likely to take the Math 8 exam than the Geometry exam (96 vs. 23 students). At AMS, the "grad" kids are 3.4 times more likely to take the Geometry exam than the Math 8 exam (17 vs. 5 students).
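These comparisons can be recomputed directly from the 8th-grade "grad" counts in the two tables. This is a minimal sketch that uses only those counts; the function name `summarize` and the output keys are hypothetical labels chosen for readability.

```python
# 8th-grade "grad" counts copied from the 2010 STAR tables above.
GRAD_8TH = {
    "AMS": {"Math 8": 5, "Alg-1": 20, "Geo": 17, "Alg-2": 0},
    "MBMS": {"Math 8": 96, "Alg-1": 87, "Geo": 23, "Alg-2": 0},
}


def summarize(counts):
    """Share of 8th graders at Algebra 1 or beyond, plus Math 8 vs. Geometry ratios."""
    total = sum(counts.values())
    algebra_or_beyond = counts["Alg-1"] + counts["Geo"] + counts["Alg-2"]
    return {
        "pct_algebra_or_beyond": round(100 * algebra_or_beyond / total),
        "math8_per_geo": round(counts["Math 8"] / counts["Geo"], 1),
        "geo_per_math8": round(counts["Geo"] / counts["Math 8"], 1),
    }


for school, counts in GRAD_8TH.items():
    print(school, summarize(counts))
```

Working from counts rather than from the rounded percentages avoids compounding rounding error. For example, 96/23 gives the 4.2 figure for MBMS.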

Yes, the mean test scores for nearly all subjects and groups are higher at MBMS than at AMS, but the kids are taking different classes and tests! If you move the highest-performing 19.5% of 7th graders into Algebra 1 instead of Math 7, the mean score of the kids remaining in Math 7 will go down.

A high average test score hides a multitude of sins.
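The effect is easy to see with made-up numbers. This Python sketch assumes, for simplicity, that the accelerated kids are exactly the top scorers, which real placement decisions need not follow. The 20 scores are invented for illustration and are not STAR data; `mean_after_skimming` is a hypothetical helper name.

```python
from statistics import mean

# Invented 7th-grade scores, for illustration only (not STAR data).
scores = [300, 310, 320, 330, 340, 350, 355, 360, 365, 370,
          375, 380, 390, 400, 410, 420, 440, 460, 480, 500]


def mean_after_skimming(scores, fraction):
    """Mean of the scores left behind after the top `fraction` move to a harder class."""
    ranked = sorted(scores)
    n_moved = round(len(ranked) * fraction)
    remaining = ranked[: len(ranked) - n_moved]
    return mean(remaining)


print(mean(scores))                      # 382.75
print(mean_after_skimming(scores, 0.2))  # 360.9375
```

Nobody in the remaining group scored any lower; the mean dropped by more than 20 points only because the strongest students took a different test.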

On the flip side, selecting only 9.6% of the kids for Algebra 1 in 7th grade probably allows teachers to cover more material in the school year. The higher test scores at MBMS in the Algebra and Geometry sequence are a result of this more selective/elitist approach.

Which is better? My conclusion is that neither school is "better". They have different demographics and different philosophies.

But if you want to increase your kids' chances of being given the opportunity to take more difficult math classes, you might be better off in the cheaper neighborhood with the lower API score. You can use the half million or so you save to send your kids to college and fund your retirement.

Looking more globally, if you want a student population that has been exposed to more difficult math before college, then the AMS approach will better meet those goals. Study after study has shown that success in college, particularly for under-represented minorities, is strongly correlated with mastery of Algebra. Correlation does not imply causality, but who will come out against taking tougher courses before college?

Addendum:

I've written plenty about educational statistics. Look for the Education and Statistics tags.

School Correlations is another lengthy interpretation of school test scores.

Aside:

Additionally, the test scores don't tell you how much class time was devoted to the state standards and to preparing for the tests. Some teachers may teach more able students material beyond the state standards. If the standardized tests don't measure that material, the teachers and students get no credit for that work.

I don't know what happens at MBMS. But at AMS, the 6th graders taking pre-Algebra skip the 6th grade math text and dive directly into the 7th grade pre-Algebra textbook. (This is possible because of the "spiral learning" vogue, in which concepts are repeatedly re-introduced with greater complexity each time. The 4th, 5th and 6th grade math books look superficially similar because they cover the same territory.)

These Adams students see the 6th grade textbook only during the three weeks before the state test, and only to work exercises in a few of the chapters not covered again in the pre-Algebra book. There is some test prep, but I don't consider reviewing 6th grade math for 3 weeks excessive, especially when the rest of the year is spent accelerating the kids a year ahead in math.

Bad Dad reminded me that MBMS kids are much more likely than AMS kids to have been red-shirted in kindergarten. MBUSD kids are about 6 months older than their RBUSD counterparts in every grade. That's great for athletics and gives a boost in test scores at the primary level, but it puts the MBMS kids even further behind in math relative to their age.

Don't judge a school by average test scores.

Follow-up:

More thoughts on STAR testing

STAR test scores and external influences

When Iris saw me in the dress, she squealed that this is very, very Rachel. We are a Gleek family.

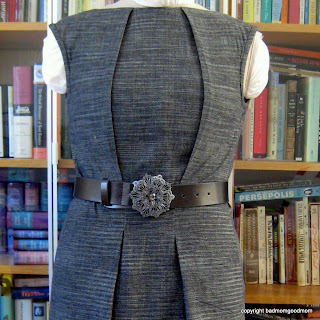

The dress back. I finally understand the topology involved in the Fashion Incubator centered zipper tutorial. But there was a slight problem with the execution. ;-)

Afterwards, I discovered that I could pull the dress on over my head without opening the zip. I could have saved myself a lot of work, but I wouldn't have learned as much.

I wanted to make myself a little something from recycled textiles for Earth Day. This dress is 95% recycled content, about half of it post-consumer waste. The bottom section is made from a men's poplin shirt from a thrift store. The top section is white quilting muslin lined in cotton voile. The only new material is thread.

The pattern runs quite snug, so I went up from my usual 38/40 to 40/42 to get the ease I normally like.

This pattern was rated beginner, but BWOF instructions were never clear for beginners. If you need directions to make such a simple dress, you won't be able to decipher these instructions. ;-)

I am not sure this issue has any more distinct patterns than the new Burda Style magazine. There are many variations on the same theme.

As usual, the most classic patterns are for plus size.